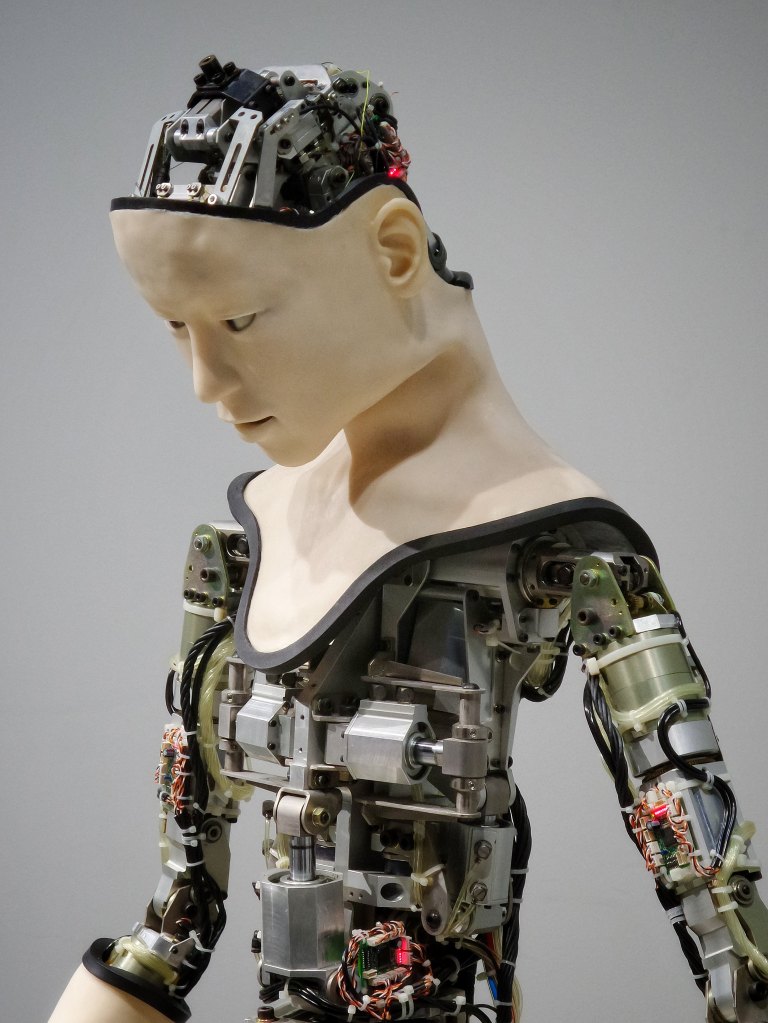

Okay, so the other day I tried it. I’ve been resisting, immediately scrolling past the AI suggestions at the top of a Google search. I don’t want some program pretending it’s human to provide me with information I need. But I had to find an expert on a topic. It was an obscure topic, but if you’re reading this blog that’ll come as no surprise. Tired of running into brick walls using other methods, I glanced toward Al. Al said a certain Joe Doe is an expert on the topic. I googled him, only to learn he’d died over a century ago. Al doesn’t understand death because it’s something a machine doesn’t experience. Sure, we say “my car died,” but what we mean is that it ceased to function. Death is the overlay we humans put on it to understand, succinctly, what happened.

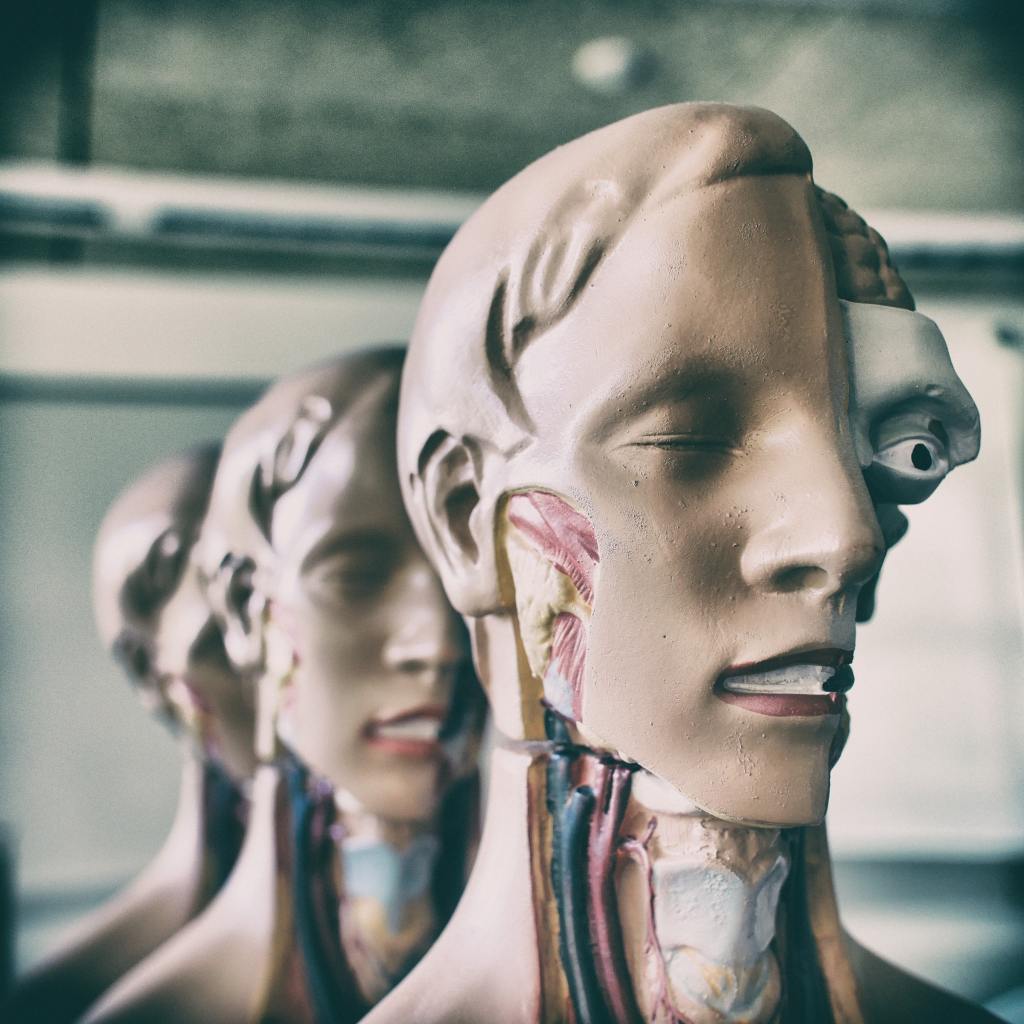

Brains are not computers, and computers do not “think” the way biological entities do. We have feelings in our thoughts. I have been sad when a beloved appliance or vehicle “died.” I know that for human beings that final terminus is kind of a non-negotiable of existence. Animals often recognize death and react to it, but we have no way of knowing what they think about it. Think they do, however. That’s more than we can say about ones and zeroes, which can only be made to imitate some thought processes. Some of us, however, won’t even let the grocery store runners choose our food for us. We want to evaluate the quality ourselves. And having read *Zen and the Art of Motorcycle Maintenance*, I have to wonder if “quality” is something a machine can “understand.”

Wisdom is something we grow into. It only comes with biological existence, with all its limitations. It is observation, reflection, and evaluation, based on sensory and psychological input. What psychological “profile” are we giving Al? Is he neurotypical or neurodivergent? Is he young, or does his back hurt when he stands up too quickly? Is he healthy, or does he deal daily with a long-term disease? Does he live to travel, or would he prefer to stay home? How cold is “too cold” for him to go outside? These are things we can process while making breakfast. Al, meanwhile, is simply gathering data from the internet (that always reliable source) and spewing it back at us after reconstructing it in a non-peer-reviewed way. And Al can’t be of much help if he doesn’t understand that consulting a dead expert on a current issue is about as pointless as trying to replicate a human mind.