How do you know someone without ever seeing them? How do you know they are who they say they are? I’ve been spending a lot of time on the phone, much of it trying to establish my identity with people who don’t know me. This has happened so often that I’m beginning to wonder how many of the people I’m talking to are who they say they are. I never was a very good dater. Going out, you’re constantly assessing how much to reveal and how much to conceal. And your date is doing the same. We can never fully know another person. I tend to be quite honest, and most of the coeds in college said I was too intense. I suppose it’s a good thing my wife and I had only one date in our three-year relationship before deciding to get married.

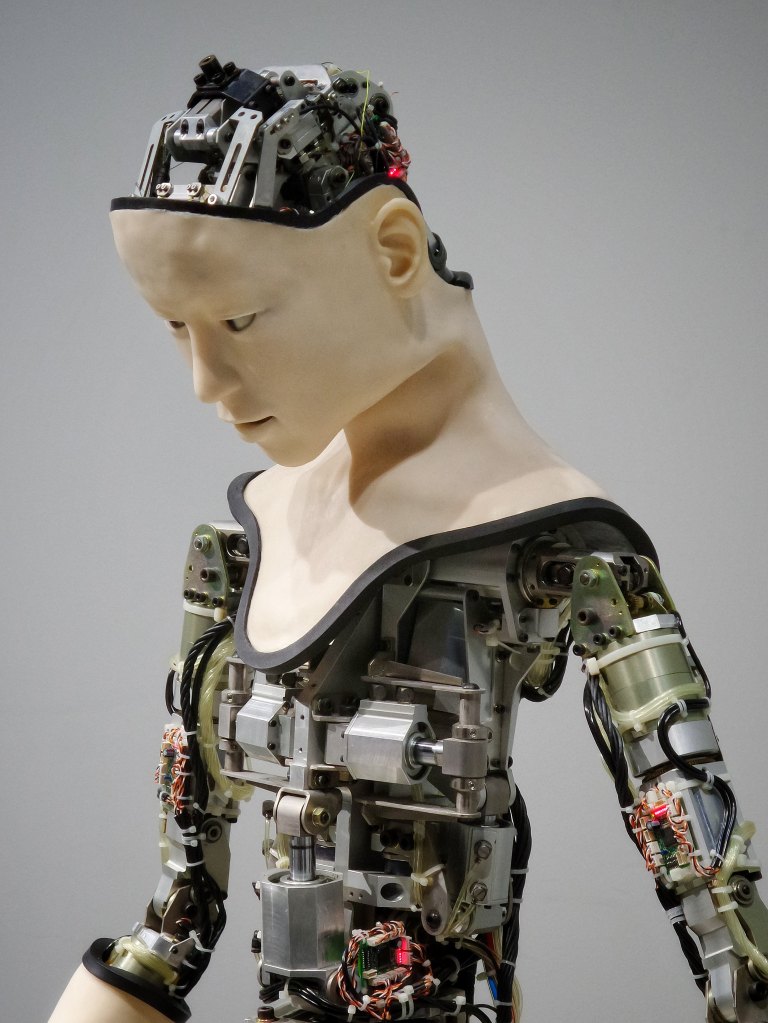

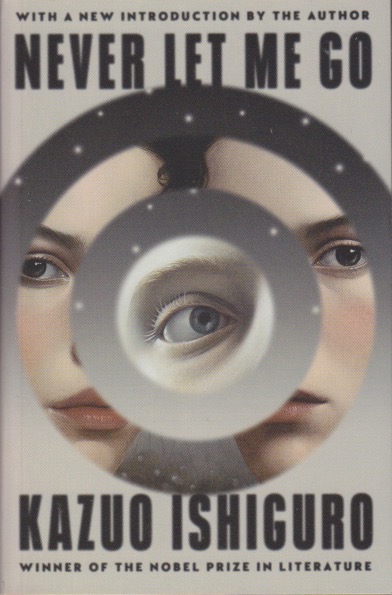

Electronic life makes it very difficult to know other people for sure. I don’t really trust the guardrails that have been put up. Sometimes the entire web-world feels false. But can we ever go back to the time before? Printing out manuscripts and sending them by mail to a publisher, waiting weeks to hear that they were even received? Planning trips with a map and dead reckoning? Looking telephone numbers up in an unwieldy, cheaply printed book? Back then you could assess who it was you were talking to, not always accurately, of course, but if you saw the same person again you might well recognize them. Anthropologists and sociologists tell us the ideal human community has about 150 members. The problem is, when such communities come into contact with other communities, war is a likely outcome. So we have to learn to trust those we can’t see. Those we’ll never see. Those who will only ever be voices on a phone or words in an email or text.

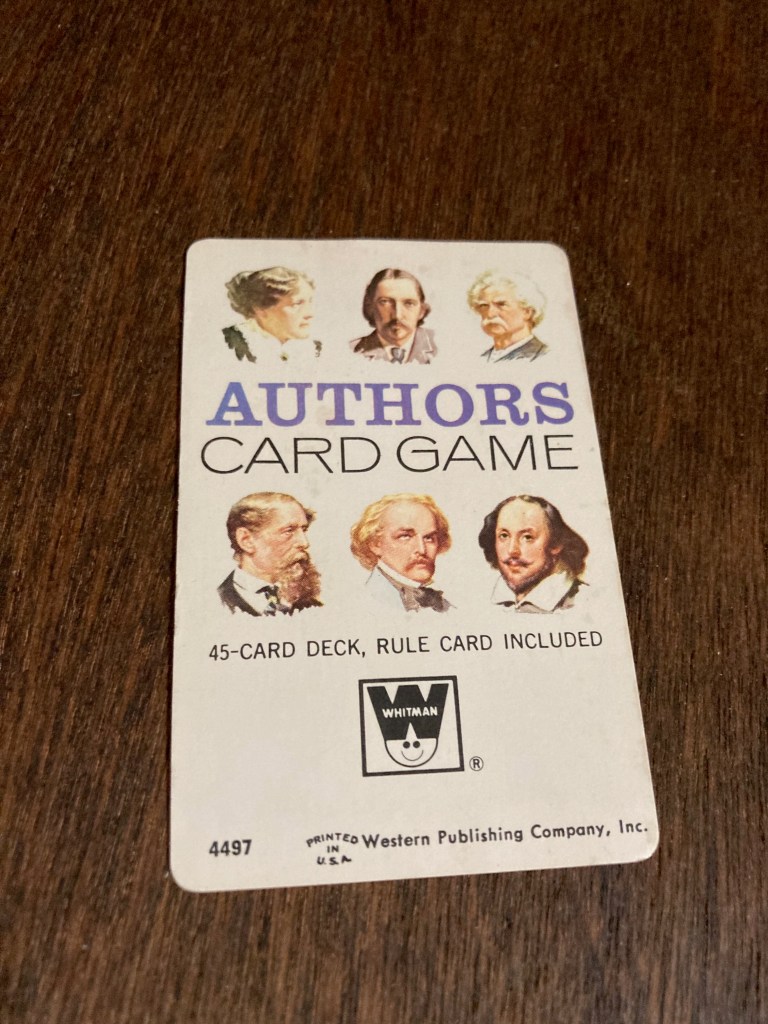

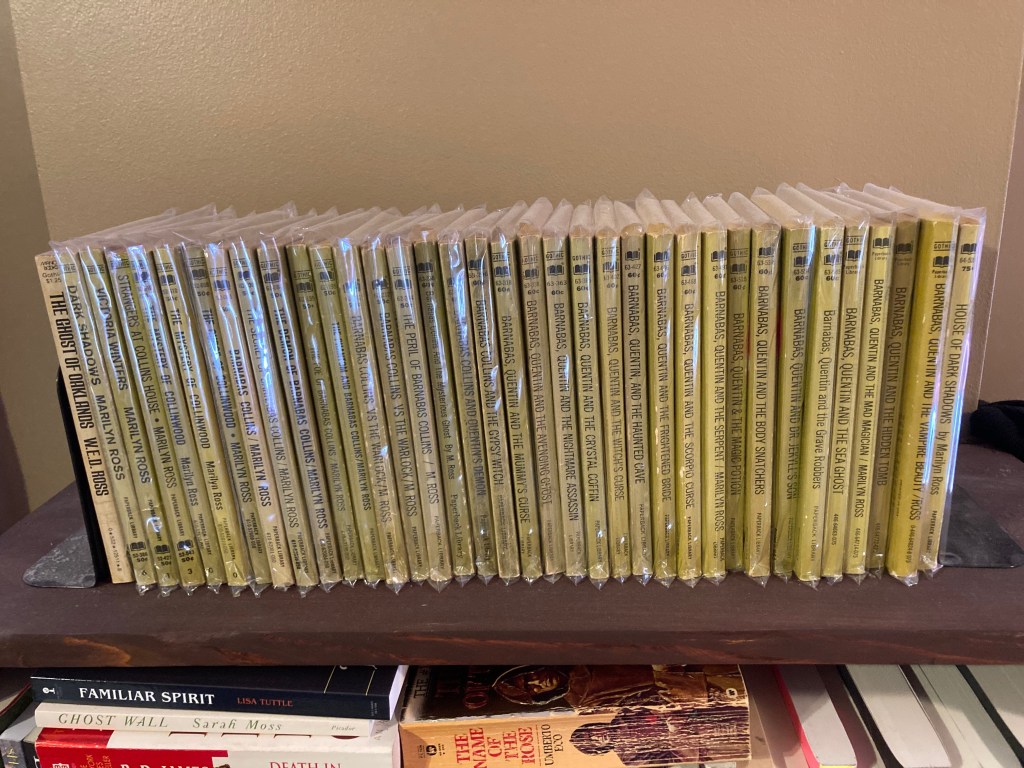

I occasionally get people emailing me about my academic work. Sometimes the sender turns out to be someone who’s hacked someone else’s account. I wonder why they could possibly have any interest in emailing an obscure ex-academic unfluencer like me. What’s their endgame? Who are they? There’s something to be said for the in-person gathering where you see the same faces week after week. You get to know a bit about a person and what their motivations might be. Ours is an uncertain cyber-world. I have come to know genuine friends online. But I’ve also “met” plenty of people who are not who they claim to be. Knowing who they really are is merely a dream.