This one’s so good that it’s got to be a hoax. One of the upsides to living under constant surveillance is that a lot of stuff, weird stuff, is caught on camera. I admit to dipping into Coast to Coast once in a while. (This show [Coast to Coast AM], originally on radio, was well known for paranormal interests long before Mulder and Scully came along.) It was there that I learned of a viral video showing devices praying together during the night in Mexico City. The purported story is that a security guard in a department store came upon electronic devices reciting the Chaplet of the Divine Mercy. One device seems to be leading the other devices in prayer. Skeptics have pointed out that this could’ve been programmed in advance as a kind of practical joke on the security guard, but it made me wonder.

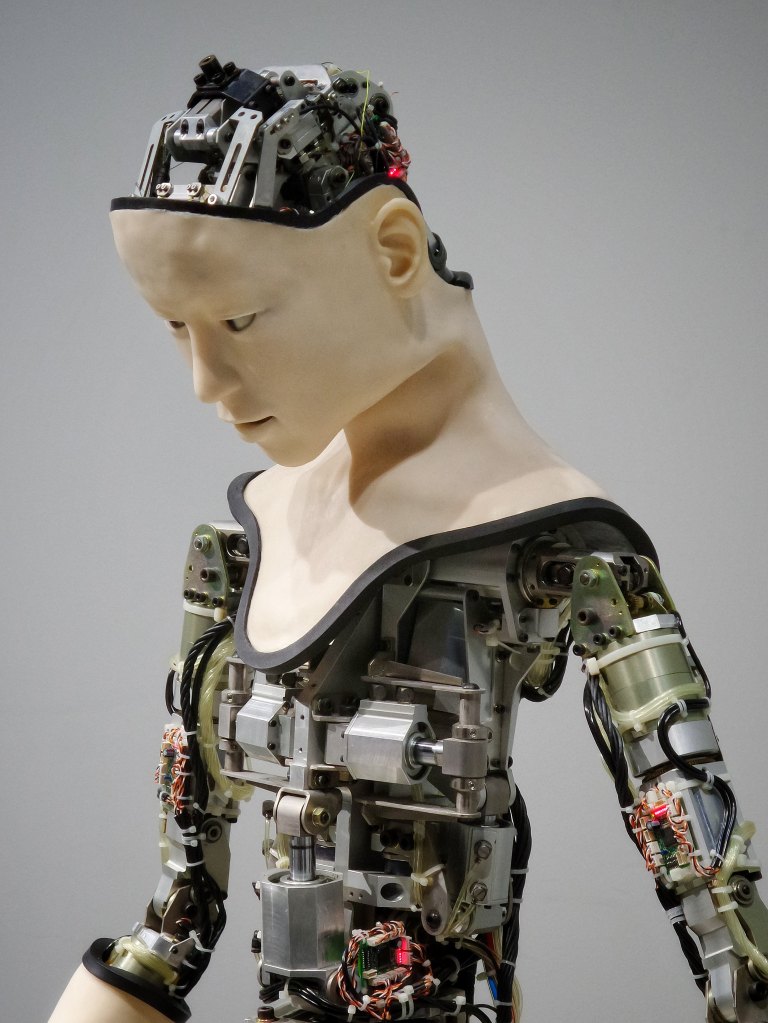

I’m no techie. I can’t even figure out how to get back to podcasting. I do, however, enjoy the strange stories of electronic “consciousness.” I use the phrase advisedly since we don’t know what human, animal, and plant consciousness is. We just know it exists. I am told, by those who understand tech better than I do, that computers have been discovered “conversing” with each other in a secret language that even their programmers can’t decipher. And since devices don’t follow our sleep schedules, who knows what they might get up to in the middle of the night when left to their own devices? Why not hold a prayer service? The people they surveil all day do such things. Since the video hit the web not long before Easter, with its late-night services, it kind of makes sense in its own bizarre way.

As I say, this seems to be one of those oddities that is simply too good to be true. But still, when I’m driving along chatting to my family in the car, some voice-recognition software will sometimes join in with a non sequitur. As if it just wants to do what humans do. I don’t mean to be creepy here, but it may be that playing Pandora with “artificial intelligence” is dicey when we can’t define biological intelligence. I’ve said before that AI doesn’t understand God talk. But if AI is teaching itself by watching what humans post, which is just about everything that humans do, maybe it has learned to recite prayers without understanding the underlying concepts. Human beings do so all the time.